How Did We Train Large Language Models Without Human Feedback?

A High Level Timeline of the Way We Train Large Language Models

1. Introduction

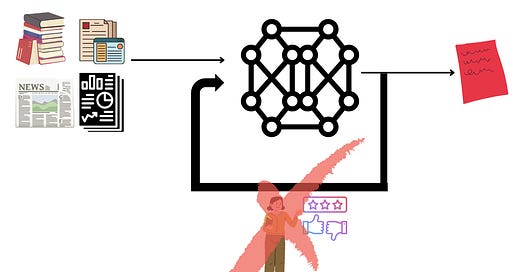

Over the past couple of years, the way we trained language models (LMs) evolved significantly. The goal is to be able to create a model with good performance on multiple language tasks and the trend had been moving towards finding a training paradigm that requires little to no fine-tuning after training the model over a large, diverse corpus. However, in the last year or so, this trend has shifted. If you have been following the tech news lately, you have probably heard of OpenAI’s new chatbot called ChatGPT. Yes, it can do pretty amazing things, but it is important to keep in mind that it works really well mainly because a lot of humans (annotators, users, and the like) keep giving it feedback! This feedback is cleverly incorporated into the model training via a relatively new training paradigm called reinforcement learning with human feedback (RLHF).12 Assuming that the annotators can provide reliable feedback and that we have enough annotators, these models can chat about almost anything! You can also try it for yourself! We will talk about how RLHF works in detail in another blog post, which is coming out soon. Stay tuned!

For now, let’s reminisce about how one would train LMs without human feedback!

2. Training Strategies

We will walk down memory lane, and talk about the way we trained large (deep) language models without human feedback. These represent my personal experience with LLMs and NLP, so if you have suggestions or if there are other moments in the model training history that you think should be highlighted, please leave a comment!

2.1. Task-Specific Models

During the earlier times of LLMs, we were just discovering that if we train a deep enough neural network with a sufficiently large dataset pertaining to one particular task, the network was able to learn from the data and would give high accuracy. These were much simpler times when each model only achieved one task. This was the pre-transformers era and the best results in NLP were achieved with recurrent neural networks. An example model of this era is GNMT. Do you remember when Google Translate transformed from being a dictionary alternative to a decent translator so suddenly in 2016? GNMT is behind that success! It uses a deep LSTM network and reduces translation errors by 60% compared to the statistical model in production at that time.

It is also worthwhile to mention that in this era, finding efficient and transferrable (across tasks) representations of the word-based data, namely embeddings, was an important concern because it quickly became tedious to manually extract task-specific features as dataset sizes grew. Word2Vec, which is one of the most popular word embedding algorithms, came out during this time in 2013.

2.2. Pretrained Task Agnostic Models + Finetuning with Data

Transfer learning refers to the concept of transferring the knowledge gained by solving one problem in order to solve another, albeit related problem. and it has been around since the late 70s. Applying it to language tasks required a large technological infrastructure and computing power due to the complex nature of these problems. As technology advanced, we became able to train large models, that can reasonably transfer knowledge. The concept called pre-training which we so often use in the context of NLP refers to one specific type of transfer learning called sequential transfer learning. In this type of transfer learning, a large language model is first trained on a very large corpus without any specific task in mind (“pre-trained”), and then is “fine-tuned” by training on a task-specific dataset.

In 2015, Dai et al. achieved intriguing results using pre-training, which was presented in the seminal paper called Semi-supervised Sequence Learning. In this work, the authors use a sequence auto-encoder for pretraining the weights and then use these weights at the initialization for an LSTM model. The clever idea here is that the sequence autoencoder is an unsupervised model with the goal of reconstructing the input sequence. Thus, it can be trained with unlabeled data, which is easier to supply. The authors then take the pre-trained autoencoder weights and then train them on sentiment analysis and text classification datasets, both of which are supervised tasks. They report a significant performance increase over randomly initialized LSTMs and this suggested that unlabeled data can improve the performance of supervised tasks.

After the transformers were introduced in the marvelous paper called “Attention Is All You Need” in 2017, models with better generalization capabilities were created and pre-training became even more prominent. Researchers from OpenAI published a paper called “Improving Language Understanding by Generative Pre-Training” and demonstrated that pre-training a transformer-based model with a diverse corpus and then fine-tuning it with a discriminative (task-specific) corpus gives outstanding results. This result is followed by the infamous BERT model developed by Google. BERT gave state-of-the-art results when it came out on eleven different language tasks, which clearly demonstrated the power of the pre-training+fine-tuning paradigm with transformer-based models! Following these results, task-specific models became completely obsolete and the concept of pre-training a transformed-based model using a diverse corpus and then fine-tuning it with task-specific datasets became pretty much the standard way to train NLP models.

One caveat of this paradigm is that it does not completely eliminate the need for task-specific datasets. If one wants to use a pre-trained model for a new task, one must still collect a significant amount of high-quality data (albeit smaller in comparison to the dataset/s used during pre-training). Wouldn’t it be so nice to get rid of this requirement and just ask the model what we want and expect it to immediately get back to us? These questions led researchers to meta/multi-task learning!

2.3. Multi-Task Learning & Meta-Learning

Both multi-task learning and meta-learning are paradigms for creating models that perform well on a multitude of tasks. Even though these concepts are closely related, there are some differences between them.

2.3.1. Multi-Task Learning

Multi-task learning is the learning paradigm in which the model is concurrently trained for multiple (related) tasks by sharing representations between different tasks. The advantage of this method is that the models trained this way are shown to have good generalization properties as they are focused solely on one task. There are two general ways to perform multi-task learning:

Soft parameter sharing: Each task has its own model. During training, the parameters for each of these models are penalized according to the distance between them. This way, these parameters are forced to be similar and learn from each other.

Hard parameter sharing: In this mode, all the tasks share the hidden layers of the network, but each task has its own output-specific layers. This is a more common method compared to the first one. An example of this is Google’s MultiModel, which performs well on many different tasks from many different domains, including NLP and image recognition.

2.3.2. Meta-Learning

a) Generic few-shot learning

Meta-learning is usually referred to as learning to learn. The goal is to train a model such that it can solve new tasks that it did not learn during the training process by just looking at a few examples of the task. If you think about it, this closely resembles our learning processes as humans. Assume that you do not know how foxes and dogs look. Someone shows you a few fox and dog pictures and then shows you one more picture, asking if it is a dog or a fox picture. Now, even without explicitly learning to discriminate them in any other context or at any previous timestep, you can with a very high probability distinguish one from the other on the fly!

Now, let us return to the machine learning context and go over a generic meta-learning algorithm as given in the awesome MAML paper.

Here is that the model gets trained for a bunch of tasks using only K (not a large number) examples per task. The key point is that if the tasks are somewhat related to each other, learning all of them together in this fashion will prevent overfitting and help our model improve its generalization on a multitude of tasks.

b) In-context learning (Few-shot learning in NLP)

In tasks related to natural languages, we have another way of utilizing the few-shot learning paradigm. As demonstrated in the decaNLP paper, NLP models understand commands and context. Specifically, if we have a sufficiently large task-agnostic LM (language model) trained on a very diverse dataset and if we want it to perform a specific task during inference time, we can just input the command pertaining to the task along with some examples (for context) of what the desired input and output would look like and the model will (hopefully) give the desired output. For example, if we want the model to translate the English sentence “Nature language processing is cool" to Turkish using few-shot learning with K=2, our command could look like the following:

Translate from English to Turkish(command)

Potatoes are nice, Patatesler iyidir(input, output pair 1)

School’s out, Okul yok(input, output pair 2)

Nature language processing is cool, …(input, …) → Expect model to give desired output

This is possible only because the model has seen a large amount of data and is able to find the correlation between the command and examples that provide context.

If the models are trained on a colossal amount of data, they actually might not even need the context. OpenAI released two models, GPT-2 and its successor GPT-3, both of which were developed using in-context learning. They showed that it is possible to perform one-shot learning when the model is presented with the command and only one example for context, and zero-shot learning, where the model is given a prompt and no examples. It is important to note that, we do not train the model with these inputs, we just give them to the model and expect an output, which is different than what we would do in the generic meta-learning paradigm.

3. Conclusion

There have been many different ways to train large language models over the course of a few years. We started out with task-specific architectures and trained our models with the hopes of them achieving one task very well. Then, transformers were invented and we realized that if we collect a lot of data from various resources and train the transformer-based language model long enough on all of the data, we can then use this pre-trained model for any task by fine-tuning it with a task-specific dataset. In the last 2-3 years, we also realized that fine-tuning with a large dataset might not be necessary and moved on to in-context learning, where we just showed the pre-trained model some examples of the task that we want it to achieve and it really performed in the way we wanted it to! Up until this point, we kept reducing the amount of work needed to achieve a task with a model, which we covered in this blog post. In the next one, we will cover reinforcement learning with human feedback, the technology behind ChatGPT’s release! This paradigm, as the name suggests, requires tons of feedback from humans, but its capabilities are impressive! Stay tuned!

Additional Reading

I am adding some reading material I found useful here:

ChatGPT uses the same training paradigm that InstructGPT uses, which is another chatbot released by OpenAI earlier this year (2022). In InstructGPT’s paper, there is a section where it is compared with GPT-3, a model with the same architecture as InstructGPT, but did not use RLHF during training. The results clearly indicate the superior performance of InstructGPT when it comes to interacting with humans.