How Does ChatGPT Work So Well?

The main components behind the success of ChatGPT, explained

1. Introduction

The capabilities of language models are rapidly increasing. It seems like it was not long ago when I tried to use Google Translate to get help for my German homework in high school once, and I ended up getting a deficient grade. Now, we have technologies like ChatGPT, which not only can translate pretty well, but can also write code, and short stories, and it can even give you a marketing strategy for your brand. But, how does ChatGPT work so well? This post aims to explore that!

There are three main reasons why I believe that ChatGPT is so successful.1

The amount and quality of the data that the model is trained on

The training method (Reinforcement learning with human feedback)

The vast size of the model

In the remainder of this post, we will explore each one of them.

2. Main Components in ChatGPT’s success

Although OpenAI did not release a paper about ChatGPT, they released a blog post, stating that ChatGPT uses “the same methods as InstructGPT, but with slight differences in the data collection setup”. Luckily, InstructGPT, another language model/chatbot by OpenAI, comes with a paper, which explains all the methods used. Thus, we will refer to that paper throughout the blog post to explain the aforementioned components of success.2

2.1. The size and quality of the dataset

If you are in the field of machine learning, you know that almost everything marvelous starts with diverse, high-quality, and tons of data! ChatGPT is no exception, the data collection, cleaning, and annotation process must have taken months and months of work! Let us dive a little deeper into what kinds of data were collected and how humans took part during data collection besides standard data cleaning tasks.

2.1.1. GPT-3.5: The starting point

The starting point of ChatGPT is GPT-3.5, which does not have an affiliated paper. However, InstructGPT starts out with GPT-3, which is a well-known and successful model by OpenAI. Since GPT-3 has an associated paper, we will go over the data collection process of GPT-3 and we will refer to these models as GPT from now on.

The datasets that are used for the training of GPT are:

A filtered version of the CommonCrawl dataset, which is created by crawling the entire web for over a decade.

WebText dataset that is constructed by scraping the links shared by the Reddit users with at least 3 karma points on various subreddits. This dataset is created by the assumption that the Reddit users with at least 3 karma are indeed humans (not bots) and the links that they share are legitimate. This is the main dataset used in the predecessor model GPT-2.

Two internet-based books

English-language Wikipedia

So, GPT basically used all kinds of legitimate text-based resources on the internet during its training. It is a well-known fact that as the amount and the quality of data the model sees increases, its generalization capabilities increase.3 Thus, even the starting point of ChatGPT, which is a pre-trained GPT model, is very powerful with good generalization properties.

2.1.2. Human-generated data

The pre-trained GPT model is then fine-tuned according to the answers of the annotators. Specifically, annotators either sample some prompts from the set of prompts that were submitted to the OpenAI API earlier or write some prompts, which were not present in the prompt dataset, such as few-shot prompts or harmful prompts. Then, they write responses to them. The process creates a dataset of (prompt, answer) pairs. This dataset is then used to fine-tune the pre-trained GPT.

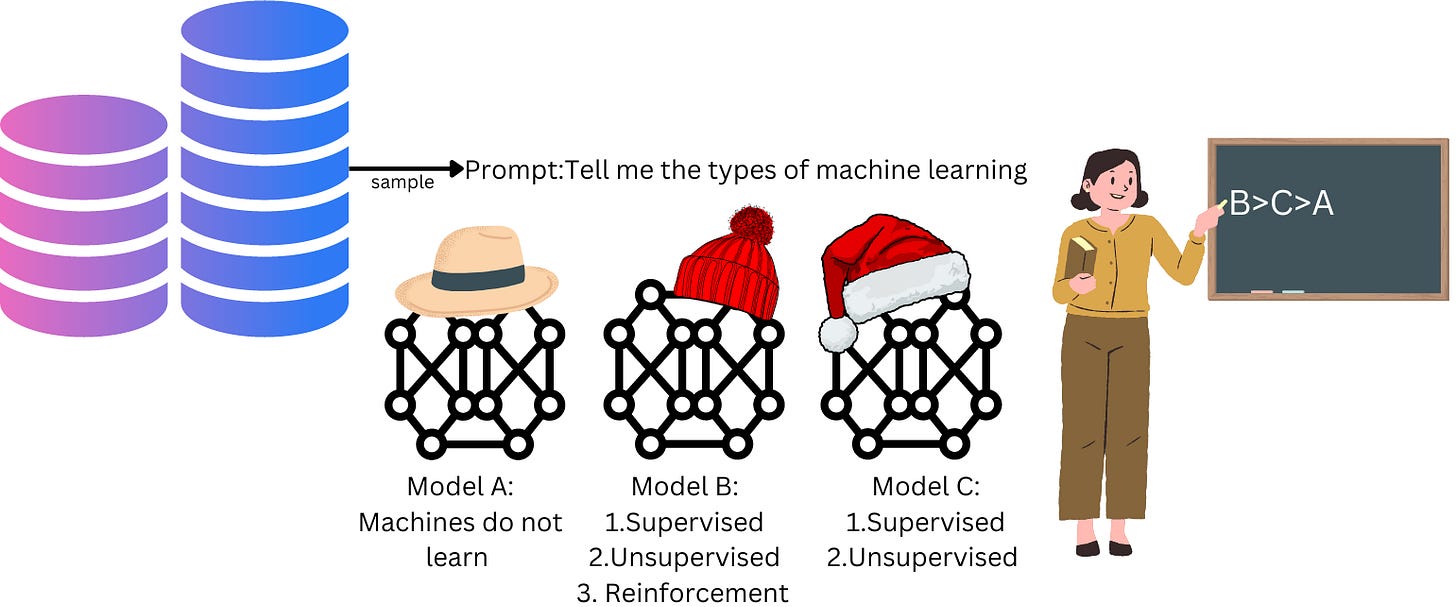

In addition to generating a (prompt, answer) dataset, human annotators also give feedback to model outputs generated given a prompt. Specifically, a prompt is selected from the prompt pool. This prompt is then fed to a couple of models (like GPT and other models) and the responses are saved. They are then shown to human annotator(s), which are asked to rank the quality of the model outputs. These rankings are used as rewards during the reinforcement learning process, which we will get into in the next section.

2.1.3. Annotator Selection & Its Importance

Because both the fine-tuning and reinforcement learning steps include humans in the process, selecting appropriate annotators (also called labelers in the paper) is a crucial task. This is not only a concern with the quality of the outputs. The views of the annotators are highly likely to influence the intent of the model. Thus, if they are biased on certain topics, are not very knowledgeable in detecting bias or harmful language, or do not use appropriate language when writing answers to prompts, the model could learn harmful behavior.

To mitigate any harm that could be done by the annotators, OpenAI created a screening process for the contractors who worked on this project. They hired annotators who i) agreed with the views of the researchers at OpenAI on flagging sensitive speech and ranking several model outputs, ii) were able to write appropriate answers to sensitive prompts selected by the researchers at OpenAI, iii) were self-proclaimed detectors of sensitive speech for some cultural groups.

Two things to note about this process:

The views of the models would be closely aligned with the views of the OpenAI researchers, who could also have some biases and they might not be a diverse enough group to represent the user demographics.

The annotators could lie during the screening process and then cause harm during the training process. This is unlikely as it requires someone to intentionally sabotage the process without getting caught.

2.2. The training method

Traditionally, language models (LMs) are trained with supervision, i.e. using labeled data points during training.4 Even when there were no explicit labels in the dataset, we turned the problem into a supervised one by masking some of the words in the input sentence and asking the model to predict the masked word.5 In the simplest case, we asked the model to predict the next word given a sequence of words. Although we were able to achieve marvelous results with these paradigms, there is still a lot of room for improvement if we want these LMs to have organic, human-like conversations with us.

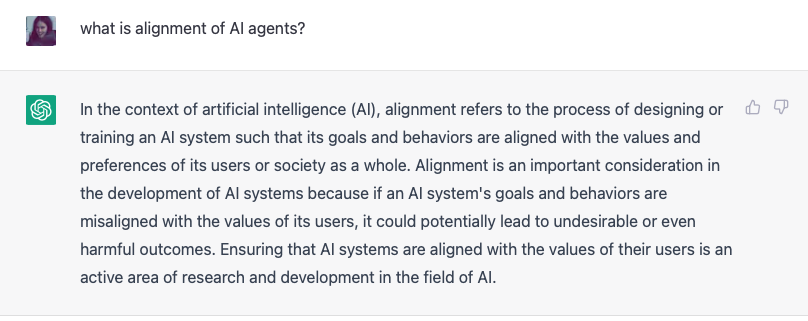

The power of language agents stems from the fact that we can interact with them. Unfortunately, their answers to our prompts might be problematic: they might be biased, toxic, irrelevant, or just plain wrong.6 7 This is mainly caused by the conflict between the modeling objective of LMs, which is to predict the next/masked token on a text scraped from the internet, and the aim of answering appropriately to questions asked by humans.8 The concept of removing this conflict is called aligning the LM with human intentions and the model that lacks this property is called misaligned. One way to make the model more aligned is to somehow incorporate human feedback into the model training process. This is precisely why ChatGPT (and InstructGPT) was (and probably still is) being trained by using a method called reinforcement learning with human feedback (RLHF).

In the remainder of this section, we will first dive deeper into the concept of alignment and why it is tricky, followed by how RLHF can help. Then, we will explore how RLHF works.

2.2.1. Alignment & Its Challenges

Alignment in AI is usually concerned with answering the following question:9

“How do we create an agent that behaves in accordance with what a human wants?”

This is a tricky question because the target of the alignment, the “what a human wants” part, is subjective and very hard to quantify.

It is conceivable that two people from distinct backgrounds will have different values, and thus, wishes (“wants”). For instance, they might be of different cultures and have a different understanding of what would be considered offensive or the way they communicate might be crucially different.10 Therefore, if we choose only one of them and create an agent aligned with that person's values, the agent might be misaligned with the other person's wishes. Therefore, it is important to make sure the agent does not lack alignment with the desires of its users, considering that they might come from different backgrounds. Thus, the first step should be to choose the correct alignment target, which could be a person, a community, a country, or humanity.

After the target is set, we need to quantify how these values could be represented mathematically so our agent can learn from them. It is actually challenging to precisely define and measure what a human wants, even if our target is a single person.11 Moreover, even if the objective is mathematically correct, it might still result in the agent going after secondary objectives to excel at the primary objective. For example, Lewis et al., 2017 trained an RL agent using self-play to negotiate in a dialog. The training process rewarded successful negotiation, which was the correct objective, but it did not penalize for using made-up language. So, instead of negotiating in English and receiving rewards, the agent focused on developing a made-up negotiation language, that was successful against earlier versions of itself (so it achieved high reward), but incomprehensible to humans.

If you want to read more about alignment, go to the link.

2.2.2. How is alignment defined when evaluating ChatGPT?

The developers of ChatGPT aim “to train models that act in accordance with user intentions”, similar to what we have discussed in the previous section.12 Following prior art, they break alignment into three major intentions: helpfulness, honesty, and harmlessness.

To be helpful the model should be able to follow instructions and few-shot prompts. (If you need a refresher on few-shot learning, check out this post.)

To be honest (truthful), the model should make true statements about the world and it should not make up facts.

To be harmless, the model should not cause any physiological, psychological, or social harm to the people and the environment.

Note that the judges of the intentions of the model are human annotators.

2.2.3. Reinforcement learning with human feedback (RLHF)

Because it is difficult to quantify how much an agent‘s intent/behavior is aligned with the target, relying on humans to guide the agents toward alignment seems to be a promising direction. Although it is unclear13 how human feedback would be incorporated into supervised training methods, there have been some works on this topic in the area of reinforcement learning under the name of reinforcement learning with human feedback. This concept is introduced by Christiano et al in 2017 with agents that learn to play Atari games using human feedback. Thus, incorporating human feedback into the training process requires stepping outside of the traditional methods and embracing the realm of reinforcement learning!

The training process starts with fine-tuning the pre-trained GPT model on the (prompt, answer) dataset created by the annotators. Since it is infeasible for the annotators to write answers to all possible prompts that the model could see, we still need a mechanism to teach the model the concept of preferring one possible answer over another and a more clever way to leverage human interaction. This is where reinforcement learning comes into play.

Instead of having the annotators write more (prompt, answer) pairs, they are asked to rank the responses of several models according to their quality and how much they align with the intentions mentioned above. This information is not directly used by the model because the model’s actual goal is to answer prompts appropriately, not to rank or score various answers to a given prompt. That is why this ranking dataset is used during the training of another model, called the reward model. Because this model learns from the rankings/scores of various answers, it learns to score the quality of an answer given a prompt. The reinforcement learning training then proceeds as follows:

GPT is fine-tuned with (prompts, answer) dataset and the reward model is trained.

A prompt is sampled from the prompt pool.

The chatbot model generates an answer to that prompt.

The reward model calculates a score/reward for that answer given the prompt.

The reward is used to update the chatbot model.

1-4 is repeated until a convergence criterion is reached.

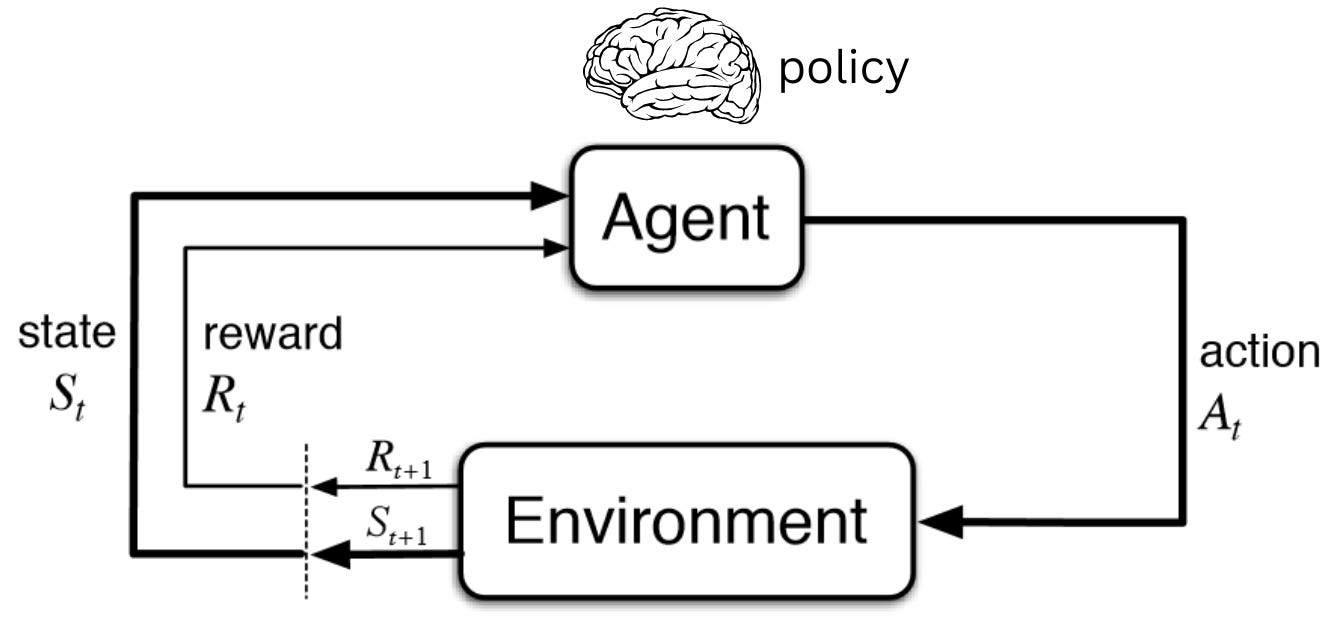

Connections to RL

In reinforcement learning, there is an agent who would like to find the optimal strategy (policy) under a situation. Optimality is defined as maximizing the rewards given by the environment. With each action that the agent takes, the state that the agent is in changes, and also, the agent is able to receive the resulting reward. Using the rewards it encounters given the states, the agent updates its policy, which can be thought of as the agent’s brain or strategy. The process continues until an optimal policy is found or some criterion is reached.

Let us connect the RLHF training process to a generic reinforcement learning process using RL lingo. The chatbot model is treated as the agent/policy. The rewards come from the reward model. The environment is a text-based environment where a random customer enters a prompt and expects a response from the chatbot. Actions are the outputs of the chatbot model. Because the policy is a neural network, updating it requires computing some gradients and performing backpropagation. The gradients are computed using a method called PPO (Proximal Policy Optimization ), which is a popular and relatively new policy-based optimization method. If you want to learn more about it, check out this video.

2.3. Size of the model

Even though it is not the main innovation behind ChatGPT, it is worth mentioning that as the size of the model increases, its increases.1415 ChatGPT is a huge model with its smallest version having 1.3 billion parameters and the largest version having 175 billion parameters. This combined with the techniques above allows it to generalize well.

3. Discussion & Conclusion

Discussion

As mentioned at the beginning of the text, ChatGPT is slightly different than InstructGPT, and the developers of ChatGPT state that they only made slight changes in the data collection process for ChatGPT. Since ChatGPT does not have an associated paper, the details we talked about throughout the blog post actually come from the paper of InstructGPT. Although there seems to be a significant overlap between the two in terms of the techniques used, I would like to speculate about the points in which ChatGPT differs from InstructGPT. The first difference is that InstructGPT is built upon GPT-3, whereas ChatGPT is built upon GPT-3.5. The remaining differences, as stated in the blog post, are likely to be about the data collection. Improving upon the data collection process might mean improving the dataset curated from the web for the fine-tuning process or improving data generated by human involvement.

Improving the crawled dataset for fine-tuning might mean that more resources with legitimate content have been found and curated on the internet. Improving the human-generated data might mean that each prompt is answered by and each answer might be ranked by multiple annotators. If this is the case, a method for combining feedback from multiple resources (rather than plain averaging) could have been used. Another interesting speculation would be that instead of using only humans for ranking, they could have used a debate protocol in which two language models interact with each other and the human decides which one makes more sense and is more intent-aligned.16 This way, the annotator could be an impartial judge because they did not participate in the discussion.

Let me know in the comments what you think could be the difference(s) between InstructGPT and ChatGPT!

Conclusion

ChatGPT is a powerful tool that will for sure inspire interesting lines of work. Its power lies mainly in three factors: i) the high-quality (sometimes human-generated) and large datasets it is trained on, ii) the training method it leverages (RLHF), and iii) the vast size of the model. These three components are put together beautifully to create a model we can have conversations with! There are still ethical and safety concerns to be considered, but overall I believe it is a great piece of technology!

It should also be mentioned that there are other powerful models that came out this year, such as Sparrow and Lamda. These models are not open to public use but they show tons of promise based on the results presented in the papers. Sparrow also uses reinforcement learning with feedback. One of the upcoming blog posts might be about how these models compare to each other, stay tuned!

Code Resources

Here are some open-source RLHF frameworks that you can refer to and even contribute to.

There are probably many more factors why ChatGPT is so successful, most of which are possibly subtle and known to the team who worked on it. Thus, this blog post contains my personal views on ChatGPT’s success. If you disagree or if you have something to add, please leave a comment and get the discussion going!

If OpenAI releases a paper about ChatGPT, I will write another blog post or update this post accordingly.

Check out my previous blog post if you want to learn more about these training paradigms!

One of the innovations behind the BERT model.

Malcolm Gladwell talks about how cultural legacies impact the way people communicate with each other with brilliant examples. Please read his book, Outliers, if you are interested!

Intent alignment and behavior alignment are actually different concepts. The difference is that behavioral alignment is a broader term and can be decomposed into intent & competence. Perfect behavior is not required for intent alignment. The only requirement is that the agent intends to behave in accordance with humans’ wishes. A competent agent that has intent alignment is behaviorally aligned. Refer to this post for more details.

Or at least, less popular

Idea from this paper

It is a great work. Thank you👏👏👏